When Tesla pointed out the functional limitations of its FSD software, it listed a number of corner cases, such as "static objects such as road debris, emergency vehicles, construction zones, complex intersections, obstructions of any kind, adverse weather, and dense traffic," along with "ghost probes" (pedestrians or vehicles that suddenly dart out from a blind spot), motorcycles that ignore traffic rules, and so on.

From an R&D perspective, these problems are unresolved requirements or bugs. From a consumer's perspective, they mean that FSD software can be extremely dangerous.

Lidar can address all of these problems. Surestar's C-Fans series is one example: these factors were taken into account from the very start of its design.

1. High resolution (0.1°×0.1°)

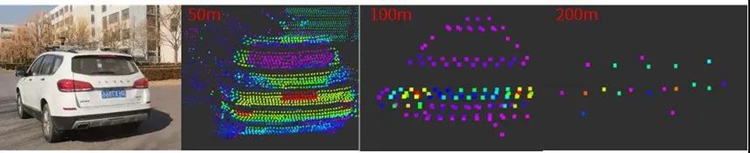

In urban and highway driving, scenarios such as pedestrians ignoring traffic rules far ahead, or vehicles unexpectedly reversing on a highway, cause problems for today's millimeter-wave radar and camera-based detection. High-resolution lidar can solve these problems and provide stable perception input to the decision and planning system. Although real-time lidar data is a sparse point cloud, the 256-line C-Fans256, with its ultra-high angular resolution of 0.1°×0.1°, still achieves a point grid of about 0.4 m×0.4 m at a range of 200 m. For an ordinary SUV about 1.8 m wide and 1.8 m tall, this means a 4×4 point matrix is still visible at 200 m.
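The arithmetic above can be checked with a short sketch. It assumes a perfectly uniform 0.1°×0.1° beam grid (real scan patterns may not be uniform) and uses the small-angle approximation for beam spacing. The helper names `grid_spacing` and `min_points_across` are hypothetical. The exact spacing at 200 m comes out at about 0.35 m, so the 0.4 m figure is a slightly conservative rounding: with 0.4 m spacing a 1.8 m object is guaranteed 4 points in each direction, and with the exact spacing it is guaranteed 5.

```python
import math


def grid_spacing(range_m: float, angular_res_deg: float) -> float:
    """Distance between adjacent beams at a given range (small-angle arc length)."""
    return range_m * math.radians(angular_res_deg)


def min_points_across(size_m: float, spacing_m: float) -> int:
    """Worst-case number of beams that land on an object of this size, in one direction.

    Depending on how the object lines up with the grid, it spans either
    floor(size / spacing) or one more beam; the floor is the guaranteed minimum.
    """
    return math.floor(size_m / spacing_m)


spacing = grid_spacing(200.0, 0.1)
print(f"spacing at 200 m: {spacing:.3f} m")

n_exact = min_points_across(1.8, spacing)    # using the exact spacing
n_rounded = min_points_across(1.8, 0.4)      # using the article's 0.4 m figure
print(f"1.8 m SUV: {n_exact}x{n_exact} points (exact), {n_rounded}x{n_rounded} points (0.4 m grid)")
```

The worst-case floor is used deliberately: a detector cannot rely on favorable alignment between the object and the beam grid.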

Figure 1 C-Fans256 vehicle measured point cloud

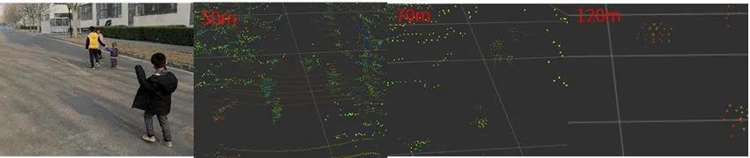

Figure 2 C-Fans256 measured point cloud for children (height ≈ 1.1 m)

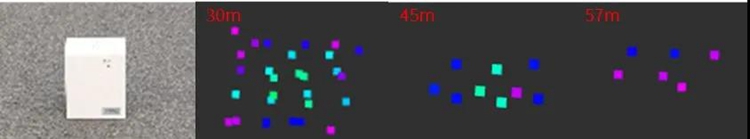

Figure 3 C-Fans256 measured point cloud for small obstacles (20cm×20cm×20cm)

High resolution means high grid density, which enables detection of smaller obstacles at longer range. On complex urban roads, the vehicle can pick out distant details sooner; at high speed, it can detect the movement or abnormal behavior of distant vehicles earlier. This detection capability translates into greater safety.
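The link between angular resolution and detection range can be made concrete by inverting the spacing formula: for an object of a given size, find the farthest range at which it is still guaranteed at least k beams in each direction. This is a sketch under stated assumptions: a uniform beam grid, and a hypothetical detector requirement of k = 2 returns across the object. The 0.2° resolution is a hypothetical comparison sensor, not a real product, and `max_range_for_points` is a hypothetical helper.

```python
import math


def max_range_for_points(size_m: float, angular_res_deg: float, k: int) -> float:
    """Largest range (m) at which an object of size_m is still guaranteed k beams
    in one direction, assuming a uniform grid and worst-case alignment.

    floor(size / spacing) >= k  <=>  spacing <= size / k,
    and spacing = range * angular resolution (in radians).
    """
    return size_m / (k * math.radians(angular_res_deg))


# 20 cm obstacle (as in Figure 3); k = 2 is a hypothetical detection requirement.
for res in (0.1, 0.2):
    r = max_range_for_points(0.2, res, k=2)
    print(f"{res} deg resolution: >= 2 points on a 20 cm obstacle out to about {r:.0f} m")
```

Because range scales inversely with angular resolution, halving the resolution angle doubles the distance at which a small obstacle still receives enough points.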

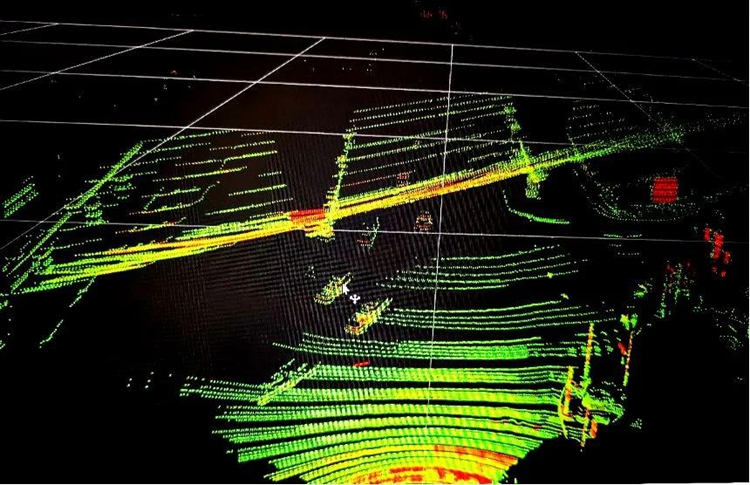

2. Large field of view (150°×30°)

At unprotected left turns and complex urban intersections, a large FOV gives autonomous vehicles rich perception information. U-turns in particular call for as wide a field of view as possible. The 150°×30° FOV of the C-Fans256 provides a wide sensing range when the vehicle turns left or makes a U-turn, improving the safety of the autonomous driving system.
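To see what a 150° horizontal FOV means during a left turn, the sketch below tests whether a point in the sensor frame falls inside the field of view. It assumes the FOV is centered on the sensor's forward axis and that the 30° vertical FOV is symmetric (±15°), which may not match how a real unit is mounted or configured. The function name `in_fov` and the cross-traffic positions are hypothetical, and the 120° case is only a hypothetical narrower sensor for comparison.

```python
import math


def in_fov(x: float, y: float, z: float,
           h_fov_deg: float = 150.0, v_fov_deg: float = 30.0) -> bool:
    """True if point (x forward, y left, z up, in metres, sensor frame) lies
    inside a FOV centred on the forward axis (symmetric FOV is an assumption)."""
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return abs(azimuth) <= h_fov_deg / 2 and abs(elevation) <= v_fov_deg / 2


# Hypothetical cross-traffic positions during an unprotected left turn.
print(in_fov(20.0, 50.0, 0.0))                   # azimuth about 68 deg: inside 150 deg FOV
print(in_fov(10.0, 50.0, 0.0))                   # azimuth about 79 deg: outside
print(in_fov(20.0, 50.0, 0.0, h_fov_deg=120.0))  # same point, narrower FOV: outside
```

The comparison shows the practical effect: a vehicle approaching well off to the side stays in view with a 150° FOV but drops out of a narrower one.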

Figure 4 C-Fans256 point cloud

3. High frame rate (80 Hz)

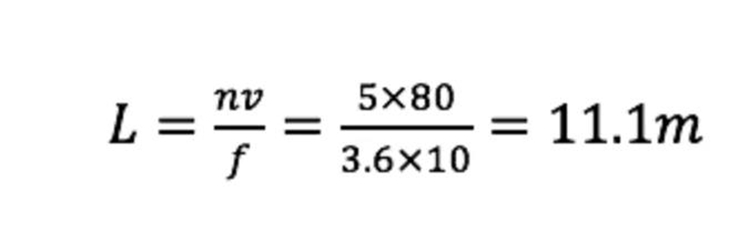

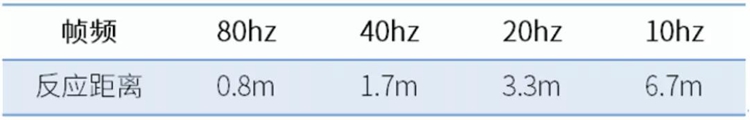

In emergencies such as merging onto a highway at speed or a vehicle braking suddenly at high speed, lidar's precise, fast, direct contour measurement can help an autonomous vehicle respond within 100 milliseconds. The key metric here is the frame rate. Suppose a ghost probe appears or a car ahead brakes suddenly, with a relative speed of 80 km/h. If the lidar frame rate is 10 Hz and the tracking algorithm needs 5 frames to complete its computation, the distance closed before the vehicle responds is:

$$
d = v \cdot \frac{N}{f} = \frac{80}{3.6}\ \mathrm{m/s} \times \frac{5}{10\ \mathrm{Hz}} \approx 22.2\ \mathrm{m/s} \times 0.5\ \mathrm{s} \approx 11.1\ \mathrm{m}
$$

where $v$ is the relative speed, $N$ the number of frames the tracker needs, and $f$ the frame rate.

In other words, between the moment the hazard appears and the moment the system begins to make a decision, the gap between the two vehicles shrinks by 11.1 m. Adding the vehicle's braking distance on top of that clearly raises the risk.

Figure 5 Vehicle response distance under different frame rates (80km/h)
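The comparison in Figure 5 can be reproduced approximately with the formula $d = v \cdot N / f$. This sketch keeps the text's assumption that the tracker always needs 5 frames; in practice the number of frames a tracker needs may itself depend on the frame rate. The function name `response_distance` is hypothetical.

```python
def response_distance(rel_speed_kmh: float, frame_rate_hz: float,
                      frames_needed: int = 5) -> float:
    """Distance (m) closed while the tracker accumulates frames_needed frames."""
    v = rel_speed_kmh / 3.6                    # km/h -> m/s
    latency_s = frames_needed / frame_rate_hz  # time to collect the frames
    return v * latency_s


for hz in (10, 20, 40, 80):
    d = response_distance(80, hz)
    latency_ms = 5 / hz * 1000
    print(f"{hz:>2} Hz: latency {latency_ms:.1f} ms, distance closed {d:.2f} m")
```

At 10 Hz the latency is 500 ms and the distance closed is about 11.1 m; at 80 Hz it drops to 62.5 ms and about 1.4 m, which is the shift from hundreds of milliseconds to tens of milliseconds described below.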

This is one of the main reasons why most autonomous vehicles currently operate in low-to-medium-speed, low-dynamic scenarios. To push the perception capability of the autonomous driving system as far as possible, the C-Fans256 raises its frame rate to 80 Hz, a level unprecedented in the industry, with the aim of helping to make driverless operation possible in high-speed, highly dynamic, and complex environments.

The 80 Hz frame rate enables smooth, fast dynamic detection. In real traffic, it can shorten an autonomous vehicle's dynamic detection time from hundreds of milliseconds to tens of milliseconds, bringing greater safety.

4. Grayscale recognition

Figure 8 Lane line recognition effect at night

The L2 scenario library is necessarily a subset of the L3 scenario library, covering functions such as LCC (lane centering assist), ALC (automatic lane change assist), ACC (full-speed adaptive cruise control), ATC (adaptive curve cruise), and LDW (lane departure warning). Using lidar can improve the robustness of the autonomous driving system at night, at tunnel entrances and exits, and in similar conditions.

These scenarios rely mainly on the sensor's accurate detection of lane lines and road shoulders, which places demands on the lidar's grayscale (return intensity) recognition: it must be both accurate and precise.

The C-Fans256 provides 8-bit grayscale resolution (256 intensity levels), enabling clear lane line recognition in all weather and improving the night-time perception of the driverless system. (www.isurestar.net)
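One common way 8-bit intensity is used for lane lines is simple thresholding: lane paint is typically more reflective than asphalt, so near-ground points with high intensity are lane-marking candidates. The sketch below is a minimal illustration, not Surestar's method. It assumes flat ground at a known height (a real pipeline would fit a ground plane), and the threshold of 180, the 0.15 m ground tolerance, the function name `lane_marking_candidates`, and the sample points are all hypothetical.

```python
from typing import List, Tuple

# x, y, z in metres (sensor frame), intensity as an 8-bit value 0-255
Point = Tuple[float, float, float, int]


def lane_marking_candidates(points: List[Point], ground_z: float = 0.0,
                            ground_tol: float = 0.15,
                            intensity_threshold: int = 180) -> List[Point]:
    """Keep near-ground points whose 8-bit intensity meets a (hypothetical) threshold."""
    candidates = []
    for x, y, z, intensity in points:
        if not 0 <= intensity <= 255:
            raise ValueError(f"intensity {intensity} is outside the 8-bit range")
        near_ground = abs(z - ground_z) <= ground_tol
        if near_ground and intensity >= intensity_threshold:
            candidates.append((x, y, z, intensity))
    return candidates


# Hypothetical sample: bright paint, dark asphalt, a bright point above the road, more paint.
sample = [
    (10.0, 1.8, 0.02, 210),   # lane paint: kept
    (10.0, 0.5, 0.01, 40),    # asphalt: too dark
    (12.0, 1.8, 1.20, 230),   # bright but well above ground (e.g. a sign): dropped
    (15.0, -1.7, -0.03, 195), # lane paint on the other side: kept
]
print(lane_marking_candidates(sample))
```

Finer intensity resolution makes it easier to place such a threshold between worn paint and bright asphalt, which is why grayscale precision matters for lane-line detection at night.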