"Perception, decision-making, and execution" among the three major elements of autonomous driving, "perception" is undoubtedly the most important one.

If an autonomous vehicle is to replace the driver and take over operation of the vehicle, it must perceive its surroundings better than a human does. Without accurate perception, decision-making and execution are impossible. And safe, reliable and accurate perception cannot be delivered by a single product or technology alone.

At this stage, L3 autonomous driving models are being mass-produced and put on sale, which truly opens the door to high-level autonomous driving. Car companies, sensor manufacturers and technology giants alike believe that only sensor fusion can meet the perception needs of high-level autonomous vehicles.

Today, Xiaohui will walk you through which sensor fusion solution is the best choice for autonomous driving!

Precise perception cannot rely on a single sensor going it alone

The importance of sensors for self-driving cars needs no repeating. Among the three major elements of autonomous driving, perception is the basis for decision-making and execution, and it is also the most critical piece of the puzzle on the way to fully realized autonomous driving.

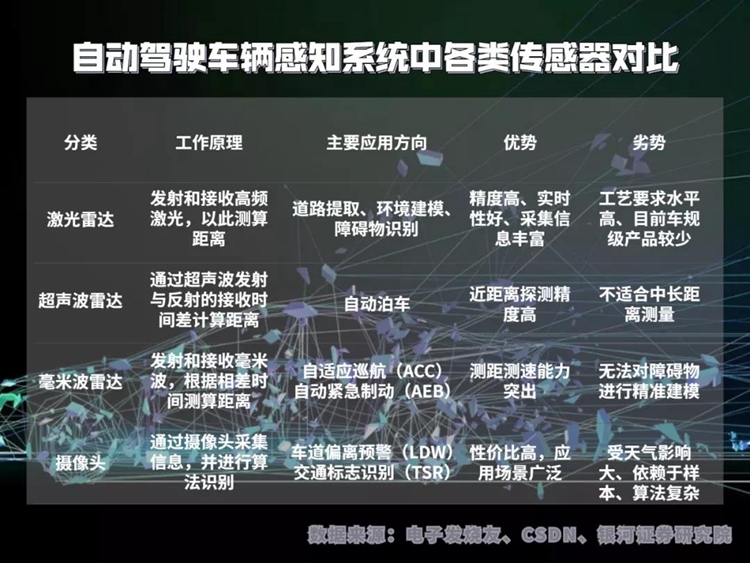

However, safe, reliable and accurate perception cannot be achieved by any one type of sensor alone. As the saying goes, "Sometimes a foot may prove short while an inch may prove long": none of the current mainstream sensors is a "hexagonal warrior", an all-rounder that can handle the sensing tasks of an autonomous vehicle on its own.

Lidar has outstanding advantages such as high accuracy, long measurement range, good real-time performance, and rich information capture. In recent years it has also made great progress in beam count, miniaturization, automotive-grade compliance, and cost control. However, when detecting distant objects, the position information lidar obtains is often sparse. And distinguishing obstacles of different materials by differences in laser reflectivity falls slightly short in complex real driving environments.

Millimeter-wave radar technology is mature, but because millimeter waves are significantly attenuated and absorbed by the atmosphere, millimeter-wave radar cannot model obstacles accurately and continuously while the vehicle is in motion.

Camera technology is also very mature and offers high resolution, but a camera can only cover a limited field of view. In addition, the images it captures are two-dimensional and carry no depth values, so they cannot accurately reflect the relative distance of different objects within the sensing range.

The camera can only focus on a limited field of view

Ultrasonic radar is extremely accurate, but its detection range is very short, and the transmission and reception of ultrasonic waves are more susceptible to weather conditions than millimeter waves. At high vehicle speeds, the ultrasonic ranging error also grows because ultrasonic waves propagate slowly.
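A rough back-of-the-envelope sketch shows why slow wave propagation matters at speed. It assumes sound travels at about 343 m/s in air (a standard figure for roughly 20 °C) and simply estimates how far the vehicle moves while one echo is in flight. The function names are illustrative and not taken from any sensor's API.

```python
# Assumption: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_S = 343.0


def echo_round_trip_s(distance_m: float,
                      wave_speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Time for a pulse to reach an obstacle and return to the sensor."""
    return 2.0 * distance_m / wave_speed_m_s


def travel_during_echo_m(distance_m: float,
                         vehicle_speed_kmh: float,
                         wave_speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance the vehicle covers while the echo is still in flight.

    First-order estimate only: it treats the obstacle distance as fixed
    during the measurement.
    """
    vehicle_speed_m_s = vehicle_speed_kmh / 3.6
    return vehicle_speed_m_s * echo_round_trip_s(distance_m, wave_speed_m_s)
```

Under these assumptions, a car at 120 km/h covers roughly 0.58 m while waiting for an echo from an obstacle 3 m away, which is large relative to the distance being measured. Passing the speed of light as `wave_speed_m_s` makes the same figure negligible, which is why the effect is specific to ultrasonic ranging.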

There are "fundamentalists" such as Tesla, which has always kept to pure visual perception. On the whole, however, solutions that fuse different types of sensors are the most popular in the autonomous driving industry.

The "standard answer" for sensor fusion

However, fusing different types of sensors is itself quite difficult. Some sensors are inherently hard to fuse, and some sensor combinations are simply not worth fusing.

Take lidar, ultrasonic sensors, and millimeter-wave radar: although they use different detection media, their working principles are similar, and lidar far outperforms the other two in detection performance.

Take C-Fans-256, the world's first 256-line automotive-grade solid-state lidar, officially released by Surestar not long ago. The C-Fans products are the first front-mounted lidars in China designed to automotive grade as a complete series. The latest product, C-Fans-256, has a resolution of 0.1° x 0.1°. With its omni-directional scanning field of view, it can easily monitor large areas at long range and give autonomous vehicles a wide field of vision in difficult scenarios such as unprotected left turns, so that vehicles or pedestrians on the right are also covered.

The world's first 256-beam automotive-grade solid-state lidar C-Fans-256

An ultra-high frame rate on par with a sports camera gives this lidar a strong ability to capture abnormal events in its field of view. Whether it is detecting obstacles or handling difficult autonomous driving scenarios such as "ghost probes" (pedestrians or vehicles suddenly emerging from behind an obstruction), high-speed merging onto a main road, and sudden braking at high speed, it performs well, which makes it better suited to high-speed, dynamic and complex real driving environments. With 8-bit grayscale resolution, C-Fans-256 achieves clear, all-weather lane line recognition.

Today, lidar has evolved to the point where it is widely recognized as the core, essential sensor for autonomous driving thanks to its comprehensive and outstanding performance. If lidar, ultrasonic sensors and millimeter-wave radar are fused together, the result is likely to be "1+1+1=1", and the advantages of the ultrasonic sensors and millimeter-wave radar do not show.

Millimeter-wave radar can effectively compensate for the lack of depth values in the raw data collected by the camera. However, its target resolution is very low: the size and contour of a target often cannot be determined, and in some scenarios it even has a "cannot see" problem. This makes low-level, deep fusion between millimeter-wave radar and the camera impossible, and more often the two can only be combined in a "simplified fusion" to solve some target speed measurement or trajectory tracking problems.

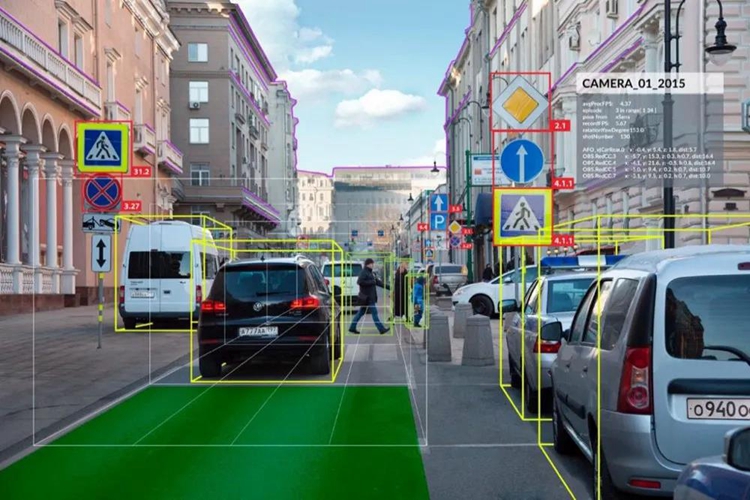

Both the vast majority of L3 autonomous driving models currently on the market and the higher-level autonomous driving solutions that have been released have chosen a perception solution built on "lidar + camera" and assisted by other environmental sensors.

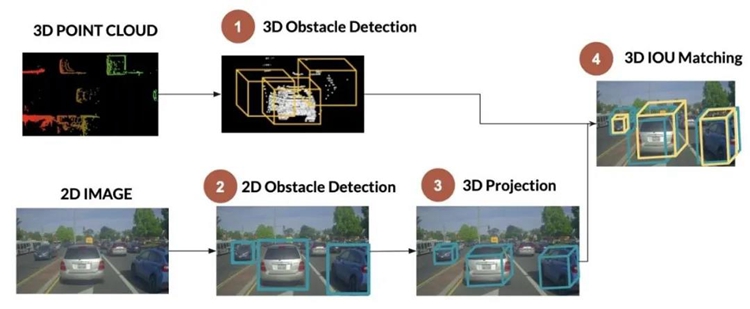

A current mainstream "lidar + camera" fusion scheme

Why, of all the kinds of sensors, have car companies and technology giants alike focused their attention on lidar and cameras?

In short, it is because the two fuse well and achieve the ultimate goal of "complementing each other's strengths", so that the autonomous driving system can truly draw on the strengths of each and cover the weaknesses of both. This combination can be called the "standard answer" for sensor fusion in autonomous driving perception systems.

Lidar + Camera = Future

Lidar is hailed by the industry as the "eye of autonomous driving". In terms of perception performance alone, it is the best of all the sensor types an autonomous driving system requires. Lidar emits and receives high-frequency laser pulses to obtain position information, which gives it extremely high ranging accuracy and strong resistance to environmental interference. Compared with other 3D sensors, it also has a clear advantage in range.

Lidar has excellent sensing capabilities

The image-level ultra-high-resolution solid-state lidar C-Fans-256 developed by Surestar can accurately capture the position and point information of vehicles and pedestrians at a distance of 200 m, and can accurately identify cubic obstacles with a side length of 20 cm at an effective distance of nearly 60 m.
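To put these figures in perspective, the spacing between neighbouring beams can be estimated from the angular resolution alone. The sketch below assumes uniformly spaced beams at the 0.1° resolution quoted for C-Fans-256 and a flat target facing the sensor. It ignores beam divergence and scan pattern, and the function names are illustrative.

```python
import math


def point_spacing_m(range_m: float, angular_res_deg: float) -> float:
    """Approximate gap between adjacent beams where they hit a flat,
    sensor-facing target at the given range."""
    half_angle_rad = math.radians(angular_res_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


def beams_across(target_width_m: float, range_m: float,
                 angular_res_deg: float) -> float:
    """Rough number of beam intervals that fit across a target along one axis."""
    return target_width_m / point_spacing_m(range_m, angular_res_deg)
```

Under these assumptions the beams are roughly 0.10 m apart at 60 m and roughly 0.35 m apart at 200 m, so a 20 cm cube at 60 m spans about two beam intervals per axis, while more distant targets receive noticeably sparser coverage.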

C-Fans-256 also keeps power consumption below 16 W and has strong automotive compliance, having passed a total of 31 tests in 9 categories under ISO 16750. It works stably in harsh conditions such as severe cold and heat, high temperature and humidity, and rough, bumpy roads. In addition, another product in the same series, C-Fans-32, is currently the only lidar product that has passed "intrinsically safe" certification.

As an image sensor that has been around for a long time, the camera is relatively low in cost, and both its industry chain and its technology are very mature. In addition, the camera has high resolution, and dense depth estimates at long range can be obtained through algorithmic processing.

Application of camera in driverless car

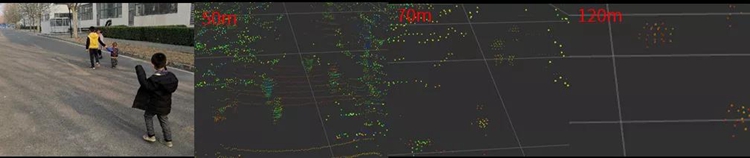

Once the lidar and camera have been calibrated and synchronized, the lidar point cloud can be projected onto the camera's image plane to form a relatively sparse but very accurate depth map. Combined with the results of image processing algorithms, the autonomous driving perception system can then obtain accurate, dense depth data with almost no blind spots, and so accurately grasp the relative distance, position, contour, and moving speed of obstacles over a large detection range.
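The projection step can be sketched as follows. This is a minimal illustration under a pinhole camera model, assuming the extrinsic calibration (rotation and translation from the lidar frame to the camera frame) and the intrinsics (`fx`, `fy`, `cx`, `cy`) are already known and lens distortion has been removed. All names are hypothetical and not taken from any particular vendor's pipeline.

```python
from typing import Dict, Sequence, Tuple

Pixel = Tuple[int, int]


def project_points_to_depth_map(
    points_lidar: Sequence[Sequence[float]],  # (x, y, z) points in the lidar frame, metres
    rotation: Sequence[Sequence[float]],      # assumed 3x3 lidar-to-camera rotation
    translation: Sequence[float],             # assumed lidar-to-camera translation, metres
    fx: float, fy: float, cx: float, cy: float,  # assumed pinhole intrinsics, pixels
    width: int, height: int,
) -> Dict[Pixel, float]:
    """Return a sparse depth map {(u, v): depth in metres} on the image plane."""
    depth_map: Dict[Pixel, float] = {}
    for p in points_lidar:
        # Rigid transform into the camera frame: p_cam = R * p + t
        x, y, z = (
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        if z <= 0.0:
            continue  # point is behind the camera
        # Pinhole projection onto pixel coordinates
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # If several points fall on one pixel, keep the nearest return
            if (u, v) not in depth_map or z < depth_map[(u, v)]:
                depth_map[(u, v)] = z
    return depth_map
```

Each entry of the returned map is one lidar return that landed on a pixel. The remaining pixels are the gaps that image-based depth estimation would fill in.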

For lidar, the camera not only smooths out the effect of the slightly sparse local point cloud when detecting distant targets, but its high-resolution real-scene images also make it easier to tell apart different obstacles that share the same material and sit close together.

Lidar, in turn, greatly mitigates the poor depth estimation accuracy of the camera, a passive sensor, and significantly improves the stability and robustness of the whole sensing system. The "chemical reaction" between the two lets the performance of the autonomous driving perception system evolve and achieve truly precise perception.

Lidar + camera fusion (data source: KITTI data set)

Many issues on the road to lidar and camera fusion still await a breakthrough, such as "how to better project the point cloud onto the image", "how to ensure that the matching is true and effective", and "how to further improve the accuracy of feature tracking".

However, as L3 self-driving cars come to market one after another and the lidar sector keeps heating up, not only car companies but also veteran lidar manufacturers like Surestar, which has been deeply involved in product research and development for more than ten years, have begun exploring lidar and camera fusion in depth. I believe it will not be long before the "perfect fusion" of lidar and camera becomes a reality, bringing us closer to a new future in which autonomous driving is mature and widespread. (www.isurestar.net)